Of the nearly two billion people living in countries that are holding elections this year, some have already cast their ballots. Elections held in Indonesia and Pakistan in February, among other countries, offer an early glimpse of what’s in store as artificial intelligence (AI) technologies steadily intrude into the electoral arena. The emerging picture is deeply worrying, and the concerns are much broader than just misinformation or the proliferation of fake news.

As the former director of the Machine Learning, Ethics, Transparency and Accountability (META) team at Twitter (before it became X), I can attest to the massive ongoing efforts to identify and halt election-related disinformation enabled by generative AI (GAI). But uses of AI by politicians and political parties for purposes that are not overtly malicious also raise deep ethical concerns.

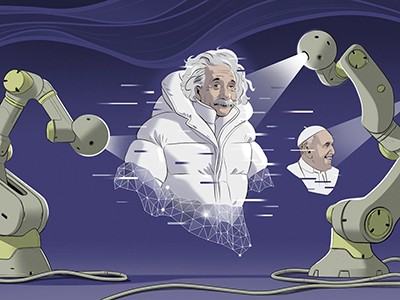

GAI is ushering in an era of ‘softfakes’. These are images, videos or audio clips that are doctored to make a politician seem more appealing. Whereas deepfakes (digitally altered visual media) and cheap fakes (low-quality altered media) are associated with malicious actors, softfakes are often made by the candidate’s campaign team itself.

In Indonesia’s presidential election, for example, winning candidate Prabowo Subianto relied heavily on GAI, creating and promoting cartoonish avatars to rebrand himself as gemoy, which means ‘cute and cuddly’. This AI-powered makeover was part of a broader attempt to appeal to younger voters and displace allegations linking him to human-rights abuses during his stint as a high-ranking army officer. The BBC dubbed him “Indonesia’s ‘cuddly grandpa’ with a bloody past”. Furthermore, clever use of deepfakes, including an AI ‘get out the vote’ virtual resurrection of Indonesia’s deceased former president Suharto by a group backing Subianto, is thought by some to have contributed to his surprising win.

Nighat Dad, the founder of the research and advocacy organization Digital Rights Foundation, based in Lahore, Pakistan, documented how candidates in Bangladesh and Pakistan used GAI in their campaigns, including AI-written articles penned under the candidate’s name. South and southeast Asian elections have been flooded with deepfake videos of candidates speaking in numerous languages, singing nostalgic songs and more, humanizing them in a way that the candidates themselves couldn’t do in reality.

What should be done? Global guidelines might be considered around the appropriate use of GAI in elections, but what should they be? There have already been some attempts. The US Federal Communications Commission, for instance, banned the use of AI-generated voices in phone calls, known as robocalls. Businesses such as Meta have introduced watermarks (a label or embedded code added to an image or video) to flag manipulated media.

But these are blunt and often voluntary measures. Rules need to be put in place all along the communications pipeline, from the companies that generate AI content to the social-media platforms that distribute it.

Content-generation companies should take a closer look at defining how watermarks should be used. Watermarking can be as obvious as a stamp, or as complex as embedded metadata to be picked up by content distributors.

Companies that distribute content should put in place systems and resources to monitor not just misinformation, but also election-destabilizing softfakes that are released through official, candidate-endorsed channels. When candidates don’t adhere to watermarking (none of these practices are yet mandatory), social-media companies can flag and provide appropriate alerts to viewers. Media outlets can and should have clear policies on softfakes. They might, for example, allow a deepfake in which a victory speech is translated to multiple languages, but disallow deepfakes of deceased politicians supporting candidates.

Election regulatory and government bodies should closely examine the rise of companies that are engaging in the development of fake media. Text-to-speech and voice-emulation software from Eleven Labs, an AI company based in New York City, was deployed to generate robocalls that tried to dissuade voters from voting for US President Joe Biden in the New Hampshire primary elections in January, and to create the softfakes of former Pakistani prime minister Imran Khan during his 2024 campaign outreach from a prison cell. Rather than pass softfake regulation on companies, which could stifle allowable uses such as parody, I instead suggest establishing election standards on GAI use. There is a long history of laws that limit when, how and where candidates can campaign, and what they’re allowed to say.

Citizens have a part to play as well. We all know that you cannot trust what you read on the Internet. Now, we must develop the reflexes to not only spot altered media, but also to avoid the emotional urge to think that candidates’ softfakes are ‘funny’ or ‘cute’. The intent of these isn’t to lie to you: they’re often obviously AI generated. The goal is to make the candidate likeable.

Softfakes are already swaying elections in some of the largest democracies in the world. We would be wise to learn and adapt as the ongoing year of democracy, with some 70 elections, unfolds over the next few months.

Competing Interests

The author declares no competing interests.
