
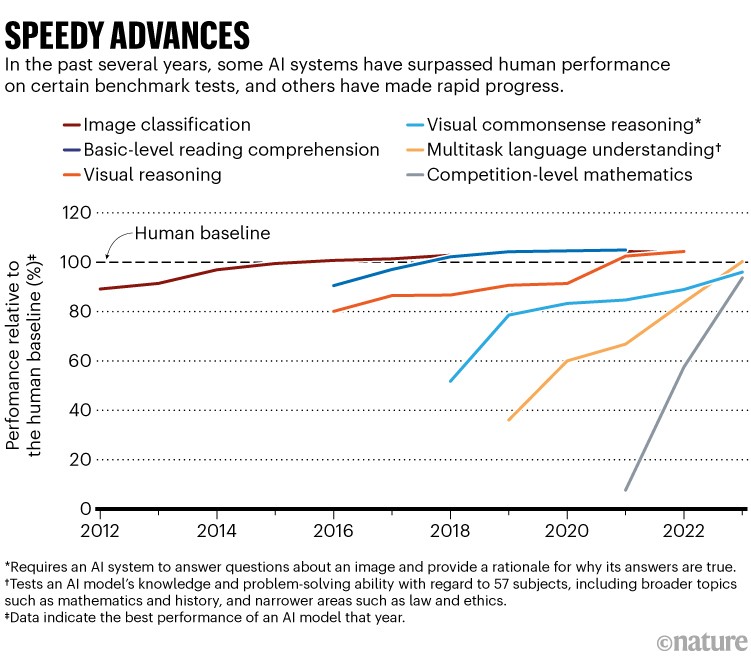

Artificial intelligence (AI) systems, such as the chatbot ChatGPT, have become so advanced that they now very nearly match or exceed human performance in tasks including reading comprehension, image classification and competition-level mathematics, according to a new report (see ‘Speedy advances’). Rapid progress in the development of these systems also means that many common benchmarks and tests for assessing them are quickly becoming obsolete.

These are just a few of the top-line findings from the Artificial Intelligence Index Report 2024, which was published on 15 April by the Institute for Human-Centered Artificial Intelligence at Stanford University in California. The report charts the meteoric progress in machine-learning systems over the past decade.

In particular, the report says, new ways of assessing AI systems (for example, evaluating their performance on complex tasks such as abstraction and reasoning) are increasingly necessary. “A decade ago, benchmarks would serve the community for 5–10 years” whereas now they often become irrelevant in just a few years, says Nestor Maslej, a social scientist at Stanford and editor-in-chief of the AI Index. “The pace of gain has been startlingly rapid.”

Source: Artificial Intelligence Index Report 2024.

Stanford’s annual AI Index, first published in 2017, is compiled by a group of academic and industry specialists to assess the field’s technical capabilities, costs, ethics and more, with an eye towards informing researchers, policymakers and the public. This year’s report, which is more than 400 pages long and was copy-edited and tightened with the aid of AI tools, notes that AI-related regulation in the United States is sharply rising. But the lack of standardized assessments for responsible use of AI makes it difficult to compare systems in terms of the risks that they pose.

The rising use of AI in science is also highlighted in this year’s edition: for the first time, it dedicates an entire chapter to science applications, highlighting projects including Graph Networks for Materials Exploration (GNoME), a project from Google DeepMind that aims to help chemists discover materials, and GraphCast, another DeepMind tool, which does rapid weather forecasting.

Growing up

The current AI boom, built on neural networks and machine-learning algorithms, dates back to the early 2010s. The field has since rapidly expanded. For example, the number of AI coding projects on GitHub, a common platform for sharing code, increased from about 800 in 2011 to 1.8 million last year. And journal publications about AI roughly tripled over this period, the report says.

Much of the cutting-edge work on AI is being done in industry: that sector produced 51 notable machine-learning systems last year, whereas academic researchers contributed 15. “Academic work is shifting to analysing the models coming out of companies, doing a deeper dive into their weaknesses,” says Raymond Mooney, director of the AI Lab at the University of Texas at Austin, who wasn’t involved in the report.

That includes developing tougher tests to assess the visual, mathematical and even moral-reasoning abilities of large language models (LLMs), which power chatbots. One of the latest tests is the Graduate-Level Google-Proof Q&A Benchmark (GPQA)<sup>1</sup>, developed last year by a team including machine-learning researcher David Rein at New York University.

The GPQA, consisting of more than 400 multiple-choice questions, is tough: PhD-level scholars could correctly answer questions in their field 65% of the time. The same scholars, when attempting to answer questions outside their field, scored only 34%, despite having access to the Internet during the test (randomly selecting answers would yield a score of 25%). As of last year, AI systems scored about 30–40%. This year, Rein says, Claude 3 (the latest chatbot released by AI company Anthropic, based in San Francisco, California) scored about 60%. “The rate of progress is pretty shocking to a lot of people, me included,” Rein adds. “It’s quite difficult to make a benchmark that survives for more than a few years.”

Cost of business

As performance is skyrocketing, so are costs. GPT-4 (the LLM that powers ChatGPT and that was released in March 2023 by San Francisco-based firm OpenAI) reportedly cost US$78 million to train. Google’s chatbot Gemini Ultra, launched in December, cost $191 million. Many people are concerned about the energy use of these systems, as well as the amount of water needed to cool the data centres that help to run them<sup>2</sup>. “These systems are impressive, but they’re also very inefficient,” Maslej says.

Costs and energy use for AI models are high in large part because one of the main ways to make current systems better is to make them bigger. This means training them on ever-larger stocks of text and images. The AI Index notes that some researchers now worry about running out of training data. Last year, according to the report, the non-profit research institute Epoch projected that we might exhaust supplies of high-quality language data as soon as this year. (However, the institute’s most recent analysis suggests that 2028 is a better estimate.)

Ethical concerns about how AI is built and used are also mounting. “People are much more nervous about AI than ever before, both in the United States and across the globe,” says Maslej, who sees signs of a growing international divide. “There are now some countries very excited about AI, and others that are very pessimistic.”

In the United States, the report notes a steep rise in regulatory interest. In 2016, there was just one US regulation that mentioned AI; last year, there were 25. “After 2022, there’s a massive spike in the number of AI-related bills that have been proposed” by policymakers, Maslej says.

Regulatory action is increasingly focused on promoting responsible AI use. Although benchmarks are emerging that can score metrics such as an AI tool’s truthfulness, bias and even likability, not everyone is using the same models, Maslej says, which makes cross-comparisons hard. “This is a really important topic,” he says. “We need to bring the community together on this.”
