
OctoAI (formerly known as OctoML) announced the launch of OctoStack, its new end-to-end solution for deploying generative AI models in a company’s private cloud, be that on-premises or in a virtual private cloud from one of the major vendors, including AWS, Google and Microsoft Azure, as well as CoreWeave, Lambda Labs, Snowflake and others.

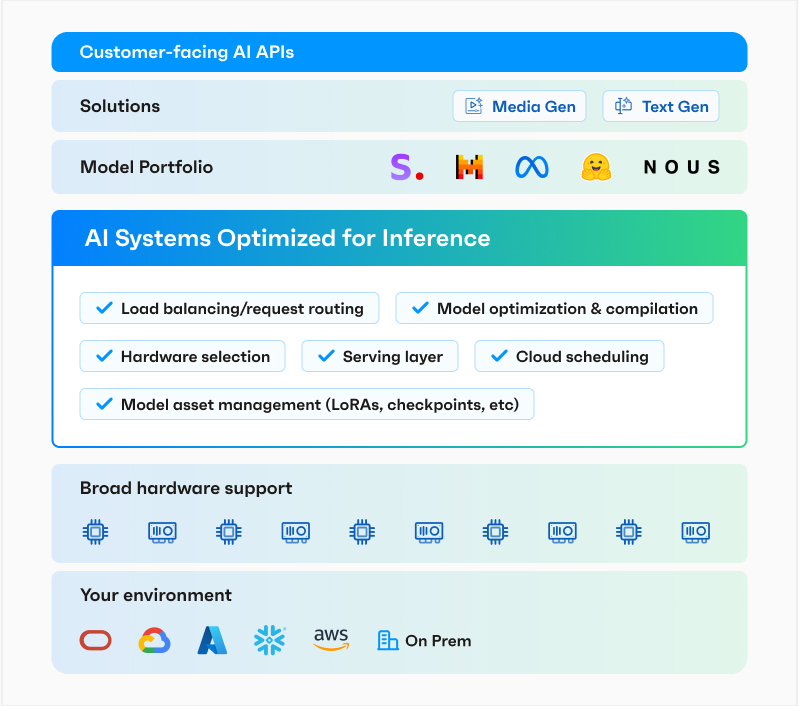

In its early days, OctoAI focused almost exclusively on optimizing models to run more efficiently. Based on the Apache TVM machine learning compiler framework, the company then launched its TVM-as-a-Service platform and, over time, expanded that into a fully fledged model-serving offering that combined its optimization chops with a DevOps platform. With the rise of generative AI, the team then launched the fully managed OctoAI platform to help its users serve and fine-tune existing models. OctoStack, at its core, is that OctoAI platform, but for private deployments.

Image Credits: OctoAI

OctoAI CEO and co-founder Luis Ceze told me the company has over 25,000 developers on the platform and hundreds of paying customers who use it in production. A lot of these companies, Ceze said, are GenAI-native companies. The market of traditional enterprises wanting to adopt generative AI is significantly larger, though, so it’s perhaps no surprise that OctoAI is now going after them as well with OctoStack.

“One thing that became clear is that, as the enterprise market goes from experimentation last year to deployments, one, all of them are looking around because they’re nervous about sending data over an API,” Ceze said. “Two: a lot of them have also committed their own compute, so why am I going to buy an API when I already have my own compute? And three, no matter what certifications you get and how big of a name you have, they feel like their AI is precious like their data and they don’t want to send it over. So there’s this really clear need in the enterprise to have the deployment under your control.”

Ceze noted that the team had been building out the architecture to offer both its SaaS and hosted platform for a while now. And while the SaaS platform is optimized for Nvidia hardware, OctoStack can support a far wider range of hardware, including AMD GPUs and AWS’s Inferentia accelerator, which in turn makes the optimization challenge quite a bit harder (while also playing to OctoAI’s strengths).

Deploying OctoStack should be straightforward for most enterprises, as OctoAI delivers the platform with ready-to-go containers and their associated Helm charts for deployments. For developers, the API remains the same, regardless of whether they are targeting the SaaS product or OctoAI in their private cloud.

The canonical enterprise use case remains using text summarization and RAG to allow users to chat with their internal documents, but some companies are also fine-tuning these models on their internal code bases to run their own code generation models (similar to what GitHub now offers to Copilot Enterprise users).

For many enterprises, being able to do that in a secure environment that is strictly under their control is what now enables them to put these technologies into production for their employees and customers.

“For our performance- and security-sensitive use case, it is imperative that the models which process calls data run in an environment that offers flexibility, scale and security,” said Dali Kaafar, founder and CEO at Apate AI. “OctoStack lets us easily and efficiently run the customized models we need, within environments that we choose, and deliver the scale our customers require.”
